From smart home management to everyday business tasks in offices, everything is being automated. Now imagine cars that drive themselves, navigate traffic, make decisions instantly, and reduce accidents. We are not talking about automatic cars. We are thinking one step ahead, to the new tech reality of 2026: self-driving cars backed by artificial intelligence.

For startups and founders in the United States, developing self-driving car software is a massive business opportunity. The global market for autonomous driving software is projected to reach $136 billion by 2046. North America dominates the market, with 39% growth driven by higher R&D investments from Tesla, Waymo, and GM.

Many founders are now considering an investment in AI-powered autonomous car fleet software development. But how do you build self-driving car software in the USA, and what are its requirements? This guide lays out an in-depth roadmap for building autonomous car software, along with market insights and cost estimates, so you can make informed decisions and position your company at the forefront of innovation in the US market in 2026.

What Is Self Driving Car Software?

Self-driving car software is a complex software stack powered by artificial intelligence. It acts as the "brain" of an autonomous vehicle, processing data from sensors (cameras, LiDAR, radar) to analyze surroundings, map the environment, and plan paths. The software then controls the vehicle actuators for steering, braking, and acceleration, which allows navigation with little or no human intervention.

Companies that make software for self-driving cars specialize in creating these intelligent systems to reduce accidents and improve road safety. Their developers integrate robotics, computer vision, and machine learning into self-driving car software. AI-driven self-driving software enhances efficiency, reduces human error, and opens possibilities for fleet operations, ride-sharing apps, and mobility services.

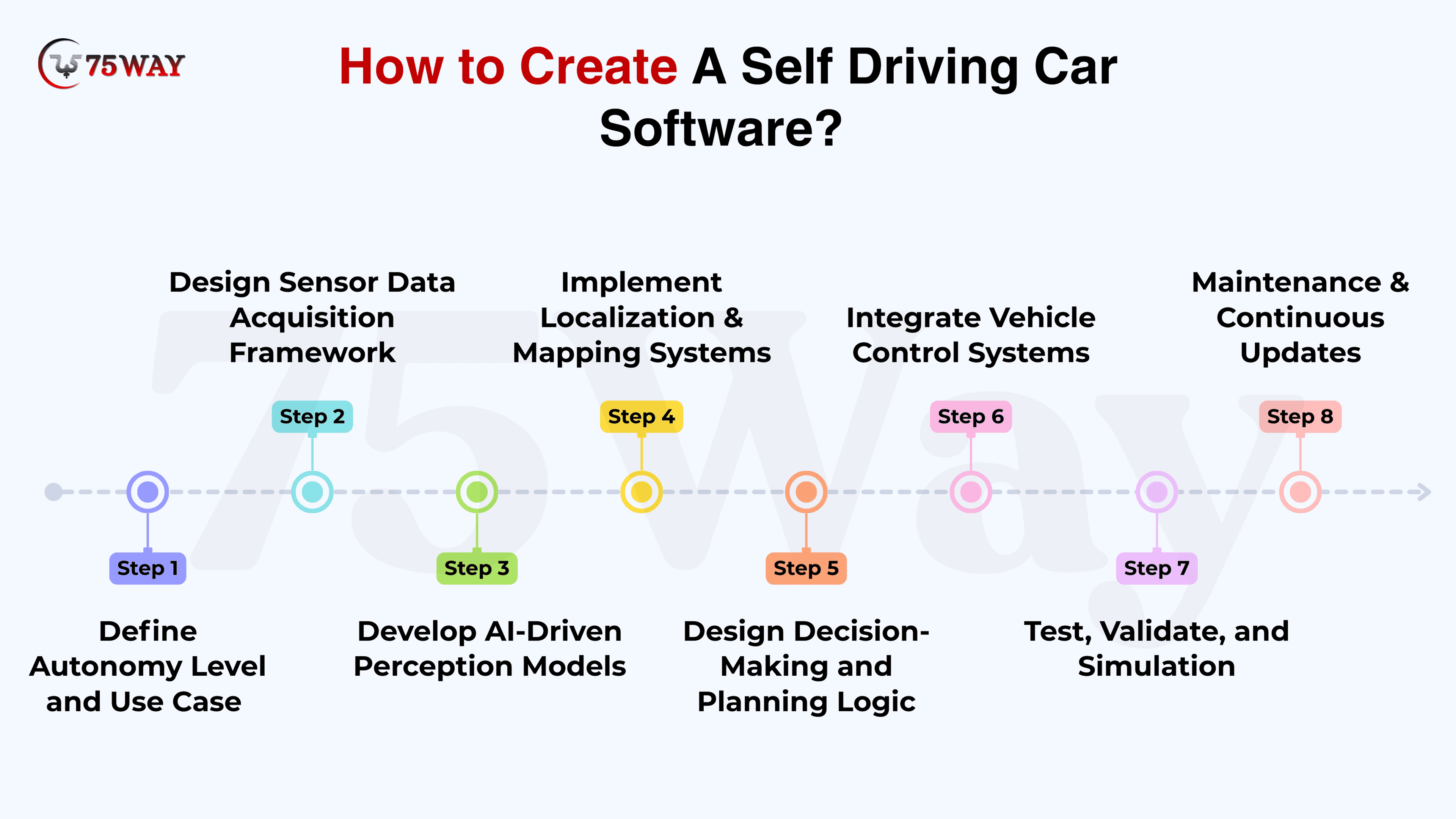

How to Create Autonomous Car Software in 2026?

The process of developing self-driving car software requires AI, sensors, and real-time data processing, so autonomous vehicles can navigate safely. Startups and founders must approach autonomous car software development systematically, from defining autonomy levels to continuous testing and launch. Each step requires strategy, the right technology, and precise planning to build a reliable system that aligns with market demands.

Define Autonomy Level and Use Case

Every autonomous vehicle initiative depends on early decisions about the autonomy level and the Operational Design Domain (ODD). These decisions determine system responsibility, software depth, sensor selection, and safety accountability. Autonomy levels, defined by SAE J3016 from Level 0 to Level 5, describe the level of driving control ownership, whereas ODD defines environmental and operational boundaries for safe software behavior.

Autonomy Levels: Autonomy levels under SAE J3016 explain how driving responsibility shifts from humans to software. Level 0 leaves full control with the driver, Level 1 offers basic assistance with either steering or speed, and Level 2 manages steering and speed together. Level 3 handles driving conditionally, Level 4 operates independently within geofenced areas, and Level 5 enables full automation everywhere.

Level 0–1 Systems: Level 0 and Level 1 systems depend on continuous human control and supervision. Self-driving car software development integrates alerts, warnings, braking assistance, or steering input. These levels suit early deployment, simpler validation, reduced sensor requirements, and lower regulatory exposure for consumer-focused autonomous features.

Level 2–3 Capability: Level 2 and Level 3 introduce partial to conditional automation across defined scenarios. The autonomous car software manages steering, acceleration, and braking, yet human intervention remains essential. These levels demand advanced perception, driver monitoring, and controlled ODDs, such as highways or traffic-congestion environments.

Level 4–5 Scope: Level 4 and Level 5 represent high to full automation with minimal or no human involvement. Level 4 operates within strict ODD boundaries, like robotaxi zones or parking facilities. Level 5 removes geographic or environmental limits, requiring the highest software intelligence and redundancy.

ODD Use Cases: The Operational Design Domain (ODD) connects autonomy levels to real-world deployment scenarios. Highway pilots emphasize long-range sensing and lane logic, while urban robotaxis require dense perception and pedestrian awareness. Valet parking focuses on low-speed precision, and driver-assist features prioritize safety alerts and response timing.
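
To make the link between autonomy level and ODD concrete, here is a minimal Python sketch. The `Odd` and `Conditions` fields and the `HIGHWAY_PILOT` values are illustrative assumptions, not part of SAE J3016; only the six level names come from the standard.

```python
from dataclasses import dataclass
from enum import IntEnum


class SaeLevel(IntEnum):
    """SAE J3016 driving automation levels."""
    NO_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL_AUTOMATION = 2
    CONDITIONAL_AUTOMATION = 3
    HIGH_AUTOMATION = 4
    FULL_AUTOMATION = 5


@dataclass(frozen=True)
class Odd:
    """Hypothetical ODD description; every field is an illustrative assumption."""
    road_types: frozenset
    max_speed_kph: float
    allowed_weather: frozenset
    geofenced: bool


@dataclass(frozen=True)
class Conditions:
    """Snapshot of the current operating conditions."""
    road_type: str
    speed_kph: float
    weather: str
    inside_geofence: bool


def within_odd(odd: Odd, now: Conditions) -> bool:
    """Return True only if every current condition falls inside the ODD."""
    if now.road_type not in odd.road_types:
        return False
    if now.speed_kph > odd.max_speed_kph:
        return False
    if now.weather not in odd.allowed_weather:
        return False
    if odd.geofenced and not now.inside_geofence:
        return False
    return True


def human_must_supervise(level: SaeLevel) -> bool:
    """At Levels 0 to 2 the human driver supervises at all times."""
    return level <= SaeLevel.PARTIAL_AUTOMATION


# Example ODD for a highway pilot feature (values are made up for illustration).
HIGHWAY_PILOT = Odd(
    road_types=frozenset({"highway"}),
    max_speed_kph=110.0,
    allowed_weather=frozenset({"clear", "light_rain"}),
    geofenced=False,
)
```

A feature would typically call something like `within_odd` on every planning cycle and hand control back, or stop safely, as soon as it returns False.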

Design a Robust Sensor Data Acquisition Framework

Before development begins, the self-driving car software design must account for trusted, real-time sensor data flows across the vehicle. A proper data acquisition framework integrates cameras, LiDAR, radar, ultrasonic sensors, GPS, and IMU into a single, coordinated system. This framework provides the perception accuracy needed for driving decisions, safety assurance, fleet scalability, and reliable autonomous behavior across diverse operating conditions in the USA.

Layered Architecture: A layered data acquisition framework separates sensor input, edge processing, middleware communication, and application storage. Cameras, LiDAR, radar, and positioning units feed edge devices such as NVIDIA Jetson for preprocessing, timestamping, and control tasks. Middleware such as ROS 2 or a DDS implementation then distributes data reliably across software modules.

Time Synchronization: Time alignment across sensors ensures each perception frame represents the same real-world moment. Precision Time Protocol and GPS-based clocks can be used to establish a shared time reference. Hardware triggers fire the sensors at the same instant, and software timestamps align asynchronous streams, preventing perception conflicts while the vehicle is moving.

Spatial Calibration: This factor is essential in self-driving software development to align all sensors within a unified vehicle coordinate system. Intrinsic calibration corrects lens distortion and depth errors inside individual sensors. Extrinsic calibration defines the relative positioning of cameras, LiDAR, and radar so that detected objects appear consistent across all perception outputs.

Fault Tolerance: Fault tolerance preserves perception reliability during sensor degradation or failure. Redundant combinations of radar, LiDAR, and cameras preserve environmental awareness in rain, fog, or partial obstruction. Diagnostic logic in autonomous vehicle software identifies abnormal readings, and validity checks compare sensor outputs with maps and positioning data to confirm them.

Data Throughput: High sensor data volumes demand controlled throughput across the software pipeline. Zero-copy communication within ROS 2 reduces processor load. Priority rules reserve bandwidth for safety-critical signals such as braking and obstacle detection, and compression methods manage storage demands without reducing perception reliability during fleet expansion.
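
As an illustration of the software-timestamp alignment described under Time Synchronization, the sketch below pairs each camera frame with the closest LiDAR sweep. The function names and the 20 ms tolerance are assumptions for this example, not values from any specific framework.

```python
from bisect import bisect_left
from typing import List, Tuple


def nearest_index(sorted_stamps: List[float], t: float) -> int:
    """Index of the timestamp in sorted_stamps that is closest to t."""
    i = bisect_left(sorted_stamps, t)
    if i == 0:
        return 0
    if i == len(sorted_stamps):
        return len(sorted_stamps) - 1
    before, after = sorted_stamps[i - 1], sorted_stamps[i]
    return i if (after - t) < (t - before) else i - 1


def pair_frames(
    camera_stamps: List[float],
    lidar_stamps: List[float],
    tolerance_s: float = 0.02,  # assumed tolerance, tune per sensor setup
) -> List[Tuple[int, int]]:
    """Pair camera frames with LiDAR sweeps whose timestamps are close enough.

    Both lists hold seconds on a shared clock and must be sorted ascending.
    Camera frames without a sweep inside the tolerance are dropped.
    """
    pairs: List[Tuple[int, int]] = []
    if not lidar_stamps:
        return pairs
    for cam_idx, t in enumerate(camera_stamps):
        lidar_idx = nearest_index(lidar_stamps, t)
        if abs(lidar_stamps[lidar_idx] - t) <= tolerance_s:
            pairs.append((cam_idx, lidar_idx))
    return pairs
```

With a 30 Hz camera and a 10 Hz LiDAR, only about every third camera frame finds a partner, which is why hardware triggering is preferred where the sensors support it.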

Develop AI-Driven Perception Models

AI-driven perception models sit at the heart of autonomous driving intelligence in self-driving car software development. These models transform raw sensor inputs into meaningful environmental understanding. Through AI development, vehicles gain awareness of objects, motion, and road context. Self-driving car software developers rely on perception accuracy to integrate safe navigation, decision confidence, and real-time responsiveness across complex traffic conditions.

Multimodal Fusion: In an autonomous vehicle stack, camera visuals, LiDAR depth, and radar velocity are combined into a unified perception layer. Each sensor compensates for the limits of the others, creating reliable environmental awareness. This fusion allows self-driving car software developers to maintain perception accuracy across lighting changes and weather variations.

BEV Mapping: Bird’s-eye-view representations convert fused sensor data into a top-down spatial map. This representation simplifies object positioning and distance reasoning. Vehicles gain consistent spatial awareness across lanes, intersections, and dense traffic, thereby strengthening downstream decision-making and reducing perception inconsistency.

Unified Perception: Unified perception architectures integrate detection, tracking, and motion prediction. A single model reduces data handoffs and timing gaps between tasks. This approach strengthens object continuity across frames and improves reaction reliability in crowded or fast-changing driving environments.

Deep Learning Models: Deep learning frameworks rely on convolutional networks for image understanding and point-based models for three-dimensional data. These models learn patterns from large datasets covering traffic diversity. Continuous refinement improves recognition accuracy for vehicles, pedestrians, road signs, and lane boundaries.

Temporal Awareness: Temporal perception considers multiple frames instead of isolated images. Motion trends across time improve recognition of occluded or fast-moving objects. This continuity allows vehicles to anticipate movement paths, which enhances safety margins during merges, crossings, and sudden traffic changes.

Behavior Prediction: Predictive modeling analyzes object trajectories and interaction patterns. Pedestrian intent, vehicle acceleration trends, and lane changes receive early recognition. This foresight allows planning systems to react smoothly and reduces abrupt responses that could affect passenger comfort or traffic flow.
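
The BEV Mapping idea above can be sketched in a few lines of plain Python. This is a simplified occupancy grid, assuming points are already fused into the vehicle frame with x pointing forward and y to the left; production systems usually learn BEV features with neural networks rather than rasterizing points directly, and the ranges and cell size here are arbitrary.

```python
from typing import Iterable, List, Tuple

Point = Tuple[float, float, float]  # (x forward, y left, z up) in meters


def bev_occupancy(
    points: Iterable[Point],
    x_range: Tuple[float, float] = (0.0, 40.0),
    y_range: Tuple[float, float] = (-20.0, 20.0),
    cell_m: float = 0.5,
) -> List[List[int]]:
    """Rasterize 3D points into a top-down grid of 0/1 occupancy flags.

    Rows index forward distance, columns index lateral offset.
    Points outside the configured ranges are ignored.
    """
    rows = int((x_range[1] - x_range[0]) / cell_m)
    cols = int((y_range[1] - y_range[0]) / cell_m)
    grid = [[0] * cols for _ in range(rows)]
    for x, y, _z in points:
        if not (x_range[0] <= x < x_range[1] and y_range[0] <= y < y_range[1]):
            continue
        # Clamp to guard against floating-point rounding at the far edge.
        r = min(int((x - x_range[0]) / cell_m), rows - 1)
        c = min(int((y - y_range[0]) / cell_m), cols - 1)
        grid[r][c] = 1
    return grid
```

Downstream modules can then reason about distance and free space with simple row and column arithmetic instead of per-sensor coordinates.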

Implement Localization & Mapping Systems

Every autonomous vehicle decision depends on one core ability: knowing exactly where it is. Localization and mapping systems provide that awareness by combining detailed road knowledge with live sensor inputs. Together, they allow vehicles to stay aligned with lanes, respond to real-world changes, and navigate confidently through dense, unpredictable environments.

High Definition Maps: High definition maps capture roads as they truly exist, not as simplified navigation paths. Lane boundaries, traffic lights, curbs, and fixed roadside elements are stored as precise 3D references. These details give vehicles stable landmarks to rely on during everyday driving and complex maneuvers.

Real-Time Localization: Vehicle position is continuously estimated by matching live sensor data with known map features. This process corrects small positioning errors that naturally occur over time. As a result, vehicles remain accurate even in urban canyons, tunnels, or areas with weak satellite signals.

Sensor Fusion Logic: No single sensor provides dependable positioning on its own. Satellite data, inertial measurements, wheel motion, and environmental scans work together to form a balanced position estimate. This combined approach keeps navigation steady across varying speeds, road surfaces, and driving conditions.

SLAM Awareness: Simultaneous localization and mapping allows vehicles to understand their nearby surroundings as they move. Temporary local maps form in real time and align with existing road data. This capability improves navigation through construction zones, unfamiliar roads, and rapidly changing environments.

Reliability Safeguards: Localization systems rely on layered design choices to maintain consistency. Alternate sensing paths remain available during sensor interference or environmental disruption. This built-in resilience preserves navigation accuracy and operational confidence throughout extended driving operations.
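
The Sensor Fusion Logic above is often implemented with some form of Kalman filter. The one-dimensional sketch below is a deliberately reduced version, assuming position along the route is the only state; the variance values passed in are placeholders, and real localization stacks track full pose and velocity.

```python
from dataclasses import dataclass


@dataclass
class Estimate:
    position_m: float  # estimated distance along the route
    variance: float    # uncertainty of that estimate (m^2)


def predict(est: Estimate, velocity_mps: float, dt_s: float, process_var: float) -> Estimate:
    """Dead-reckon forward using wheel-odometry velocity; uncertainty grows."""
    return Estimate(est.position_m + velocity_mps * dt_s, est.variance + process_var)


def update(est: Estimate, measured_position_m: float, measurement_var: float) -> Estimate:
    """Blend in a position fix (for example GNSS or map matching); uncertainty shrinks."""
    gain = est.variance / (est.variance + measurement_var)
    position = est.position_m + gain * (measured_position_m - est.position_m)
    variance = (1.0 - gain) * est.variance
    return Estimate(position, variance)
```

When satellite signals drop out, as in a tunnel, the loop keeps calling `predict` alone and the variance grows until the next usable fix arrives, which is the drift correction described under Real-Time Localization.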

Design Decision-Making and Planning Logic

This stage defines how an autonomous vehicle behaves in real traffic. It implements algorithms that interpret road context, predict nearby movements, and select safe actions that align with driving rules. Well-designed planning logic connects perception insights with vehicle motion to allow confident responses during both routine driving and unexpected situations.

Behavior Planning Layer: Behavior planning focuses on everyday driving choices. Lane changes, stopping at intersections, yielding to pedestrians, and responding to sudden hazards are handled here. The logic follows traffic rules and common driving behavior, helping the vehicle blend naturally into real traffic without confusing other road users.

Rule and Learning Logic: Rule-based logic manages well-defined scenarios using structured decision paths. Learning-based models add adaptability by learning from past driving situations. Together, they create balanced decision systems that stay reliable on familiar roads and remain responsive in complex or uncertain traffic conditions.

Trajectory Generation Logic: This step decides the exact path the vehicle follows. Sampling methods explore multiple possible routes, then optimization techniques refine them for comfort, safety, and smooth motion. This ensures steering, braking, and acceleration feel natural rather than abrupt or mechanical.

Context-Aware Planning: Road context plays a major role in smart planning. Lane layouts, curves, merges, and traffic flow guide every movement. By staying aligned with the road structure, the vehicle maintains stable positioning and avoids unnecessary corrections that could affect passenger confidence.

System Integration Flow: Planning logic works closely with perception and control systems at all times. Simulation testing exposes the software to thousands of real-world situations before deployment. This tight integration helps decisions remain fast, realistic, and dependable when the vehicle operates on public roads.
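
A rough sketch of the sampling half of Trajectory Generation Logic follows. It scores a handful of candidate lateral offsets against obstacles and picks the cheapest safe one. The cost terms, weights, and the 1 m clearance are assumptions chosen for readability; a real planner would sample full trajectories over time and refine the winner with an optimizer.

```python
from typing import Optional, Sequence


def choose_lateral_offset(
    candidates: Sequence[float],
    obstacle_offsets: Sequence[float],
    min_clearance_m: float = 1.0,
    comfort_weight: float = 1.0,
) -> Optional[float]:
    """Pick the candidate offset (meters from lane center) with the lowest cost.

    Returns None when every candidate violates the clearance constraint,
    which a caller could treat as "brake instead of steer".
    """
    best: Optional[float] = None
    best_cost = float("inf")
    for offset in candidates:
        clearance = min(
            (abs(offset - o) for o in obstacle_offsets), default=float("inf")
        )
        if clearance < min_clearance_m:
            continue  # hard safety constraint: too close to an obstacle
        # Soft costs: stay near the lane center and keep distance from obstacles.
        cost = comfort_weight * offset ** 2 + 1.0 / clearance
        if cost < best_cost:
            best, best_cost = offset, cost
    return best
```

Separating the hard constraint (clearance) from the soft costs (comfort, margin) mirrors the rule-plus-learning split described earlier: rules decide what is allowed, scoring decides what is preferred.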

Integrate Vehicle Control Systems

In self-driving car software development, making decisions is only half the story. Control algorithms take those decisions and turn them into smooth steering, braking, and acceleration. They enable the vehicle to react naturally, stay stable on different road surfaces, and keep passengers comfortable, even in unexpected situations.

Real-Time Data Handling: Every sensor on the vehicle, LiDAR, cameras, and radar, sends thousands of signals every second. The control system interprets this flood of data instantly, deciding which obstacles to avoid and which actions to take. This continuous awareness prevents errors and helps the vehicle handle sudden changes, like a pedestrian stepping off the curb or a car cutting in unexpectedly.

Actuation Control: Once a path is planned, the car’s hardware needs precise instructions. Steering, brakes, and throttle translate high-level commands into smooth motion. Proper actuation makes lane changes confident, stops predictable, and acceleration gradual, which builds passenger trust and keeps mechanical stress low.

Simulation and Testing: Control systems are tested long before real roads. Simulations recreate traffic jams, rainy streets, or emergency stops. This allows engineers to observe how the vehicle would react in tricky scenarios without any risk. The lessons learned in virtual tests feed directly into software updates, improving real-world safety.

System Architecture: Control systems connect perception, planning, and actuation through structured software frameworks. Platforms like NVIDIA DRIVE or MotionWise link AI and sensors to the mechanical components. This architecture ensures that every input and decision flows seamlessly into the car’s movement, making it behave like one intelligent system.

Safety and Redundancy: Backup systems constantly monitor the car’s components. If a sensor fails or a signal is lost, alternate pathways activate automatically. These redundancies keep the vehicle stable, maintain passenger confidence, and reduce the risk of accidents, ensuring the car continues to operate safely in unexpected conditions.
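
To illustrate Actuation Control, here is a small PID speed controller with a toy vehicle model. PID is one common choice for longitudinal control, not necessarily what any given platform uses, and the gains, the normalized command range, and the 3 m/s² acceleration limit are all assumptions for the example.

```python
from typing import Optional


class PidController:
    """PID controller with output clamping and simple anti-windup."""

    def __init__(self, kp: float, ki: float, kd: float,
                 out_min: float = -1.0, out_max: float = 1.0) -> None:
        self.kp, self.ki, self.kd = kp, ki, kd
        self.out_min, self.out_max = out_min, out_max
        self._integral = 0.0
        self._prev_error: Optional[float] = None

    def step(self, target: float, measured: float, dt_s: float) -> float:
        """Return a normalized command: positive for throttle, negative for brake."""
        error = target - measured
        derivative = 0.0 if self._prev_error is None else (error - self._prev_error) / dt_s
        self._prev_error = error
        candidate_integral = self._integral + error * dt_s
        raw = self.kp * error + self.ki * candidate_integral + self.kd * derivative
        output = max(self.out_min, min(self.out_max, raw))
        if output == raw:
            # Anti-windup: only accumulate the integral while unsaturated.
            self._integral = candidate_integral
        return output


def simulate_speed(controller: PidController, target_mps: float, steps: int,
                   dt_s: float = 0.1, max_accel_mps2: float = 3.0) -> float:
    """Toy model: speed changes in proportion to the command. Returns final speed."""
    speed = 0.0
    for _ in range(steps):
        command = controller.step(target_mps, speed, dt_s)
        speed += command * max_accel_mps2 * dt_s
    return speed
```

Clamping the output is what keeps acceleration gradual, and the anti-windup check avoids the overshoot that would otherwise follow a long period at full throttle.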

Test, Validate, and Simulate

Testing and simulation form the backbone of safe self-driving vehicle software development. They allow developers to evaluate performance, uncover hidden issues, and refine behavior without putting vehicles on real roads. Virtual environments, hardware integration, and controlled track tests work together to ensure reliability and safety at every stage of development.

Simulation Environments: High-fidelity 3D simulators recreate roads, traffic, weather, and pedestrians with precision. Vehicles encounter realistic challenges in these virtual worlds, allowing teams to assess sensor performance and software responses under diverse and demanding conditions without risking safety.

Scenario Generation: Targeted scenarios replicate rare or dangerous events, such as sudden pedestrian crossings or complex intersections. These designed tests ensure the system handles unusual situations consistently, preparing vehicles for real-world conditions that may not occur often but are critical to safety.

Log-Based Simulation: Recorded driving data is replayed in simulation so the software can be exercised against real traffic patterns, road behaviors, and unexpected obstacles. This process validates decisions against actual events, helping refine perception, planning, and control systems in realistic contexts.

Software-in-the-Loop (SIL): Decision-making algorithms run in a fully virtual environment, letting developers observe software behavior without hardware involvement. SIL testing detects logical errors, evaluates performance under millions of virtual miles, and builds confidence before hardware integration.

Hardware-in-the-Loop (HIL): Physical components, such as sensors and ECUs, interact with simulated environments. This layer ensures software commands translate correctly into real-world responses, verifying timing, latency, and accuracy before deploying vehicles outside controlled settings.

Vehicle-in-the-Loop (VIL): Vehicles operate on closed tracks interacting with simulated conditions. This stage bridges virtual testing and reality, safely exposing cars to complex, dynamic scenarios. Continuous monitoring identifies potential failures, verifies compliance with safety standards, and enhances the system’s readiness for public roads.
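
Scenario Generation often amounts to sweeping parameters over a scenario template. The sketch below does this for a pedestrian stepping out ahead of the vehicle, using the standard stopping-distance formula (reaction distance plus v²/2a). The 0.2 s reaction time and 6 m/s² deceleration are assumed values, and a real simulator would run the full software stack instead of a closed-form check.

```python
from itertools import product
from typing import List, Sequence, Tuple


def stopping_distance_m(speed_mps: float, reaction_s: float, decel_mps2: float) -> float:
    """Distance covered during system reaction plus constant-deceleration braking."""
    return speed_mps * reaction_s + speed_mps ** 2 / (2.0 * decel_mps2)


def run_sweep(
    speeds_mps: Sequence[float],
    gaps_m: Sequence[float],
    reaction_s: float = 0.2,   # assumed system latency
    decel_mps2: float = 6.0,   # assumed braking capability
) -> List[Tuple[float, float]]:
    """Return every (speed, gap) combination in which the vehicle cannot stop in time."""
    failures = []
    for speed, gap in product(speeds_mps, gaps_m):
        if stopping_distance_m(speed, reaction_s, decel_mps2) >= gap:
            failures.append((speed, gap))
    return failures
```

The failing combinations show where the ODD needs a lower speed limit or where longer-range sensing is required.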

Maintenance & Continuous Updates

Once your autonomous car software is developed and launched, the next stage is to maintain and update it so the vehicles stay responsive. Over-the-air updates allow AI models, sensor calibrations, and control algorithms to evolve as roads and traffic change. Real-world fleet data informs future software releases, improving efficiency, safety, and adaptability across multiple vehicles and operational scenarios.

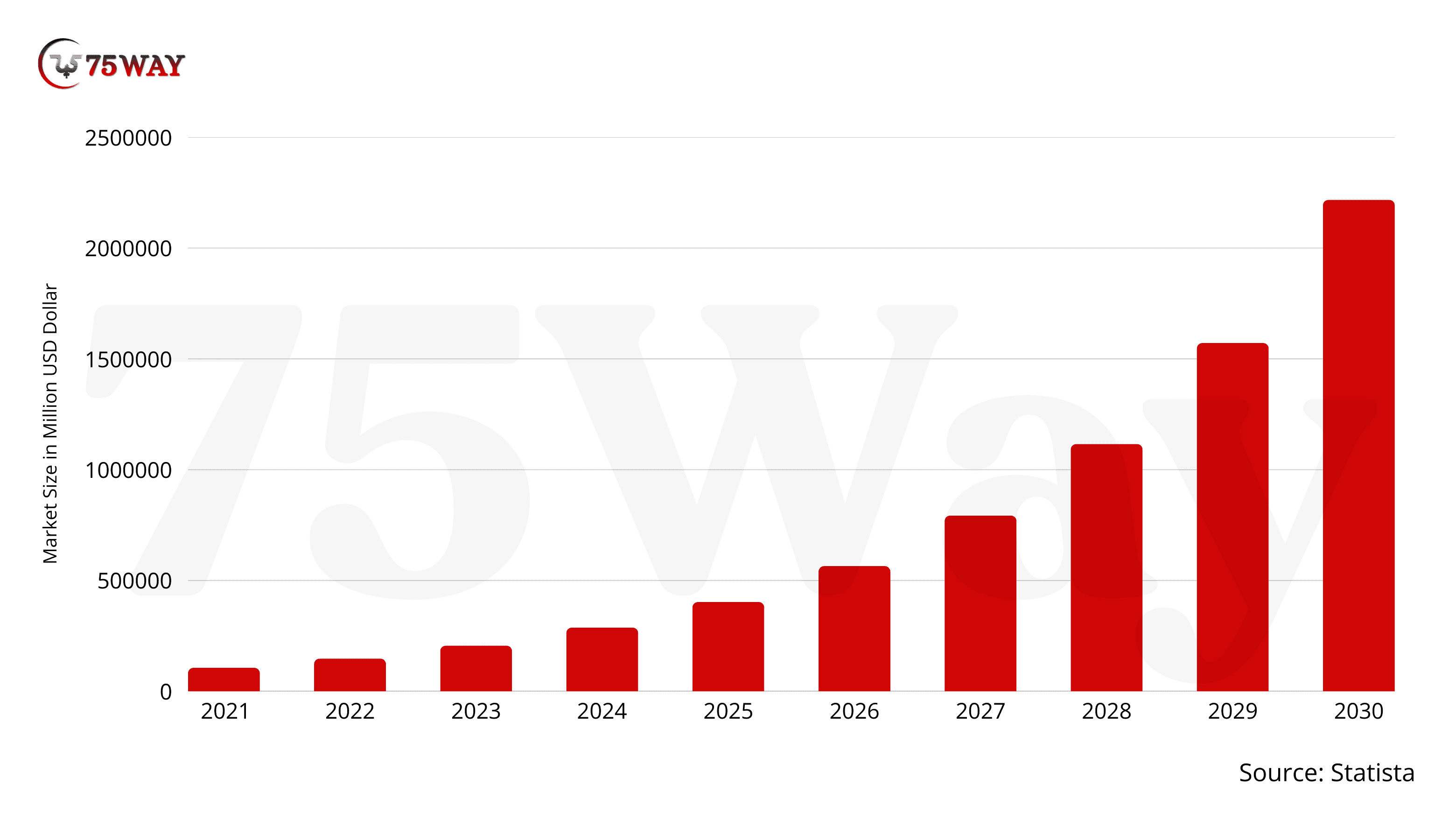

Why Invest in Autonomous Car Software Development in 2026?

With growing demand for autonomous vehicles, self-driving car software development is a strong investment opportunity. It is a strategic business move for startups and founders in the USA. The autonomous cars software market is growing at unprecedented rates, driven by innovations in AI, sensor technology, and embedded systems. Self-driving car software embedded systems enable fleets to operate efficiently, reduce accidents, optimize traffic flow, and generate tangible ROI for businesses.

- A Market Analysis Report forecast indicates that autonomous driving software will experience sustained growth, reaching approximately USD 4.21 billion globally by 2030, at a 13.6% CAGR.

- According to insights from Market Research Future, the autonomous car software market was valued at USD 26.77 billion in 2024. The industry is expected to expand from USD 33.18 billion in 2025 to nearly USD 283.33 billion by 2035, registering a strong CAGR of 23.92% throughout the 2025–2035 forecast period.

- A report by Statista states, “The autonomous vehicle market will grow to more than USD 2.3 trillion globally by 2030, driven by rapid advancements in AI and automation.”

- The global self-driving car market is forecast to grow to 76.22 million units by 2035, reflecting a solid 6.8% CAGR. This growth represents a fundamental market shift rather than gradual progress, as stated by MarketsandMarkets.
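
For readers who want to sanity-check forecasts like these, the compound annual growth rate (CAGR) arithmetic is short. The sketch below applies it to the Market Research Future figures quoted above (USD 33.18 billion in 2025, a 23.92% CAGR, and ten years to 2035); the function names are just illustrative.

```python
def project(value: float, cagr: float, years: int) -> float:
    """Compound a starting value forward at a fixed annual growth rate."""
    return value * (1.0 + cagr) ** years


def implied_cagr(start: float, end: float, years: int) -> float:
    """Annual growth rate implied by a start value, end value, and time span."""
    return (end / start) ** (1.0 / years) - 1.0


# USD billions, figures from the Market Research Future forecast quoted above.
projected_2035 = project(33.18, 0.2392, 10)
rate_2025_2035 = implied_cagr(33.18, 283.33, 10)
```

Here `projected_2035` comes out at roughly 283.3 and `rate_2025_2035` at roughly 0.239, so the quoted start value, end value, and growth rate are consistent with one another.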

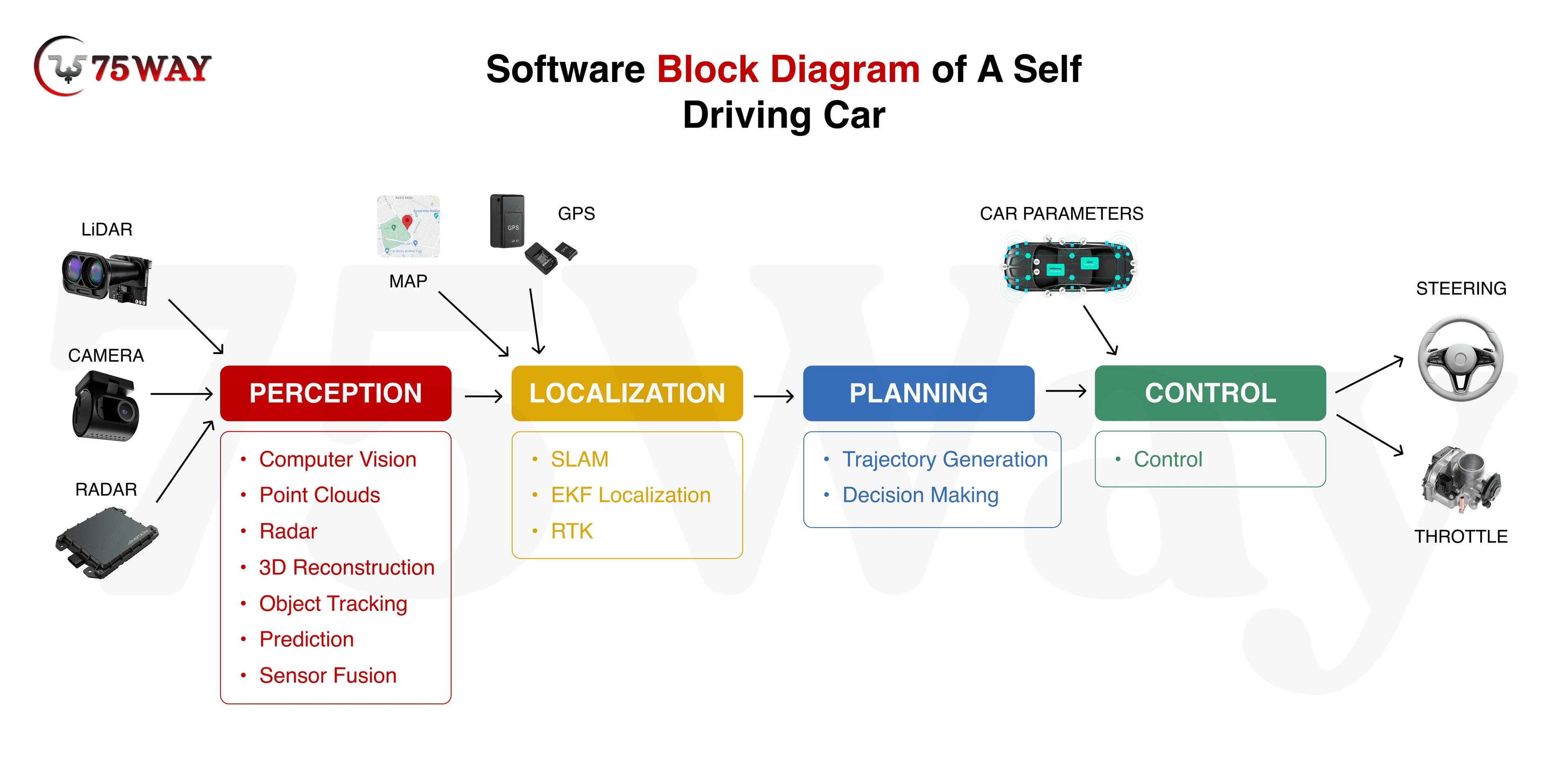

Self Driving Car Software Architecture

In self-driving car software development, the autonomous car software architecture serves as the backbone of reliable self-driving systems. It integrates perception, localization, planning, and control layers to ensure vehicles navigate safely and efficiently. This resilient stack allows flexibility for updates, fleet scaling, and AI model improvements while maintaining compliance with safety and operational standards.

Perception: This layer converts raw sensor data into actionable insights. Cameras, LiDAR, radar, and ultrasonic sensors feed AI models to detect objects, lane markings, and obstacles. Accurate perception provides real-time understanding of the environment and supports safe navigation. The perception layer is critical for collision avoidance and smooth decision-making in dynamic traffic scenarios.

Localization: The second layer of the self-driving car software architecture determines the vehicle’s exact position using GPS, sensor fusion, and high-definition maps. Accurate localization enables precise navigation, route planning, and adherence to the operational design domain. Combined with perception, this layer aligns the vehicle with lanes, places detected obstacles on the map, and maintains situational awareness for reliable autonomous driving.

Planning: Planning algorithms chart safe and efficient paths for the vehicle. They consider traffic rules, road conditions, and the predicted behaviors of other road users. Advanced planning logic enables autonomous cars to anticipate challenges, optimize routes, and balance speed, energy consumption, and safety.

Control: The control layer converts planning decisions into physical actions. Steering, braking, and acceleration systems respond to control algorithms, ensuring smooth, safe, and responsive vehicle movements. Effective integration between control and planning reduces errors, enhances passenger comfort, and maintains safety even under unexpected road conditions.
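
The four layers can be pictured as one function per layer, chained once per cycle. The sketch below is a heavily simplified, hypothetical pipeline: the data classes, the 30 m safe gap, the 15 m/s cruise speed, and the proportional gain are all assumptions, and each function stands in for an entire subsystem.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SensorFrame:
    obstacle_distances_m: List[float]  # stand-in for camera/LiDAR/radar detections
    route_position_m: float            # stand-in for a fused position fix


@dataclass
class WorldModel:
    nearest_obstacle_m: float
    route_position_m: float


@dataclass
class Command:
    throttle: float  # 0..1
    brake: float     # 0..1


def perceive(frame: SensorFrame) -> float:
    """Perception: reduce raw detections to the nearest obstacle distance."""
    return min(frame.obstacle_distances_m, default=float("inf"))


def localize(frame: SensorFrame) -> float:
    """Localization: report where the vehicle is along its route."""
    return frame.route_position_m


def plan(world: WorldModel, cruise_mps: float = 15.0,
         safe_gap_m: float = 30.0, route_end_m: float = 1000.0) -> float:
    """Planning: choose a target speed from the world model."""
    if world.nearest_obstacle_m < safe_gap_m or world.route_position_m >= route_end_m:
        return 0.0
    return cruise_mps


def control(target_mps: float, current_mps: float, gain: float = 0.1) -> Command:
    """Control: turn the speed error into a throttle or brake command."""
    effort = max(-1.0, min(1.0, gain * (target_mps - current_mps)))
    if effort >= 0.0:
        return Command(throttle=effort, brake=0.0)
    return Command(throttle=0.0, brake=-effort)


def tick(frame: SensorFrame, current_mps: float) -> Command:
    """One cycle through perception, localization, planning, and control."""
    world = WorldModel(perceive(frame), localize(frame))
    return control(plan(world), current_mps)
```

Keeping the layers behind narrow interfaces like these is what allows a perception model or a planner to be swapped or updated without touching the rest of the stack.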

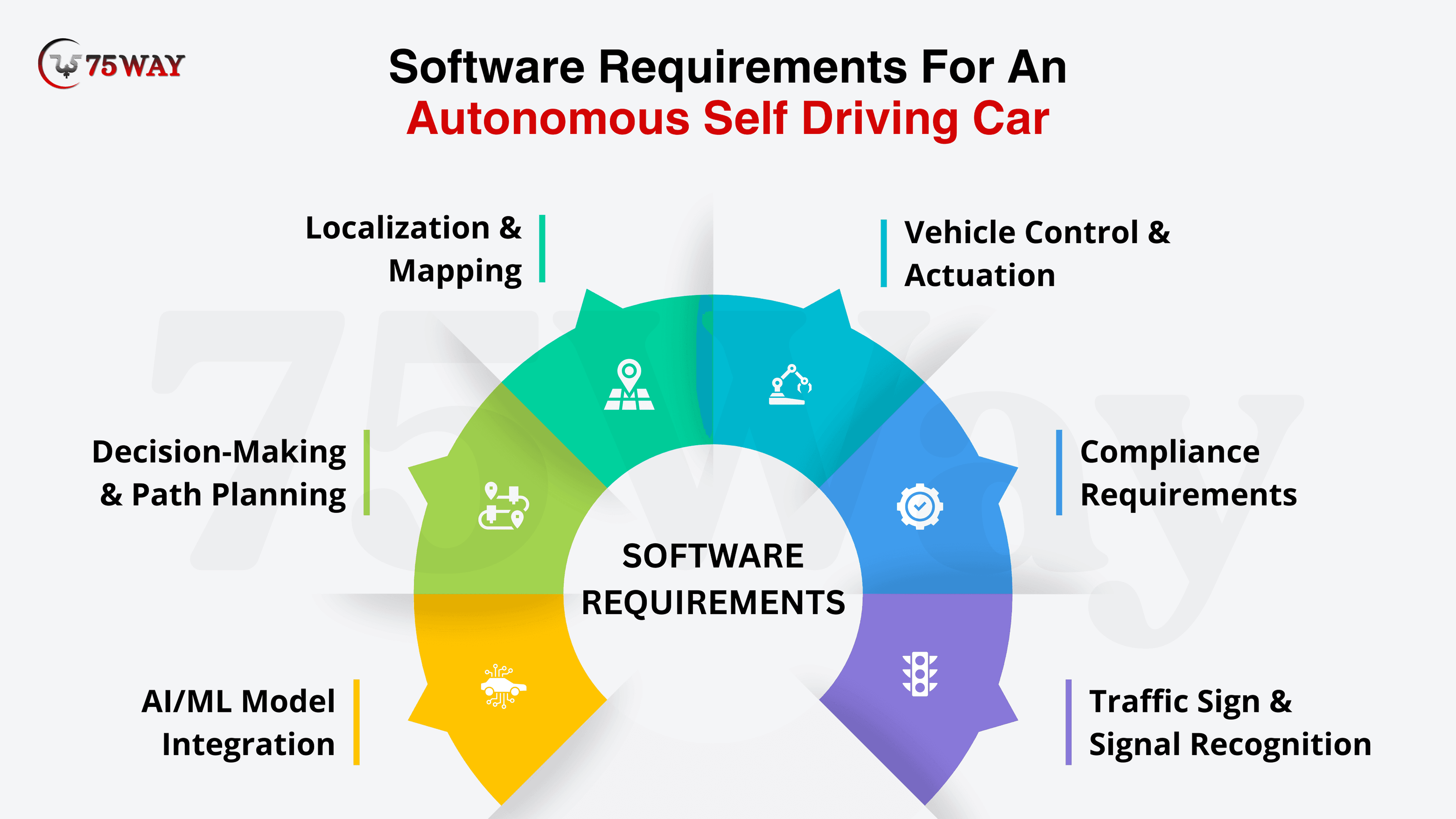

Software Requirements For An Autonomous Self-Driving Car

After understanding the architecture, you must realize that autonomous cars require specialized hardware and software to operate safely. During software development, you must meet these requirements for reliable perception, planning, and control while adhering to compliance standards. AI models, sensor integration, and safety protocols form the foundation for robust, scalable autonomous car systems.

Localization & Mapping: You must integrate GPS, LiDAR, and high-definition maps to keep the vehicle aware of its exact position. Sensor data is continuously merged to maintain lane alignment, navigate around obstacles, adapt to road changes, and enable consistent navigation even in complex urban or highway scenarios.

Decision-Making & Path Planning: The autonomous car software interprets surrounding traffic, pedestrians, and obstacles to select the safest, most efficient route. Planning algorithms balance speed, safety, and energy use, integrating traffic rules and predictions of other drivers’ behavior to keep vehicle operation reliable across dynamic conditions.

Vehicle Control and Actuation: The software must enable steering, braking, and acceleration to respond instantly to planning commands. Developers implement the control logic that executes navigation decisions, so autonomous vehicles maintain stability, smooth motion, and responsive handling. The vehicle can then adapt to turns, stops, and unpredictable conditions without compromising passenger safety or comfort.

Safety & Compliance Requirements: The self-driving car software development process must address functional safety under ISO 26262 and safety of the intended functionality (SOTIF) under ISO 21448. Systems built to these standards monitor hardware and software and enable fail-safe responses, self-diagnostics, and redundancy. These safeguards protect passengers, maintain operational reliability, and reduce the risk of failures in complex or unexpected situations.

AI/ML Model Integration: Perception, prediction, and decision-making rely on AI, agentic AI, and machine learning models. These models process sensor inputs, adapt to new scenarios, and refine performance over time. This integration allows vehicles to learn from real-world data and improve accuracy, safety, and responsiveness across the fleet.

Traffic Sign & Signal Recognition: Signs, signals, and road markings are interpreted and fed into planning systems. Accurate recognition helps self-driving vehicles obey traffic regulations, anticipate hazards, and navigate intersections or signals confidently. It provides reliable operation in dense urban areas or unfamiliar routes.
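
The monitoring and fail-safe behavior listed under Safety & Compliance Requirements can be sketched as a heartbeat watchdog. This is an illustration only: the component names, the mode strings, and the timeout are hypothetical, and neither ISO 26262 nor ISO 21448 prescribes this particular design.

```python
from typing import Dict, List, Sequence


class HeartbeatMonitor:
    """Tracks the last time each component reported that it is alive."""

    def __init__(self, timeout_s: float) -> None:
        self.timeout_s = timeout_s
        self._last_seen: Dict[str, float] = {}

    def beat(self, component: str, now_s: float) -> None:
        """Record a heartbeat from a component at time now_s."""
        self._last_seen[component] = now_s

    def stale(self, now_s: float) -> List[str]:
        """Names of registered components whose last heartbeat is older than the timeout."""
        return sorted(
            name for name, seen in self._last_seen.items()
            if now_s - seen > self.timeout_s
        )


def select_mode(monitor: HeartbeatMonitor, now_s: float,
                critical: Sequence[str] = ("lidar", "planner")) -> str:
    """Map component health to a (hypothetical) operating mode."""
    stale = monitor.stale(now_s)
    if any(name in critical for name in stale):
        return "MINIMAL_RISK_STOP"  # a critical component went silent
    if stale:
        return "DEGRADED"           # redundancy covers the loss, reduce capability
    return "NOMINAL"
```

Note that this sketch only watches components that have reported at least once; a production monitor would also start from a fixed list of required components so that one which never comes up is caught too.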

Pros and Cons of Self Driving Cars Software Development

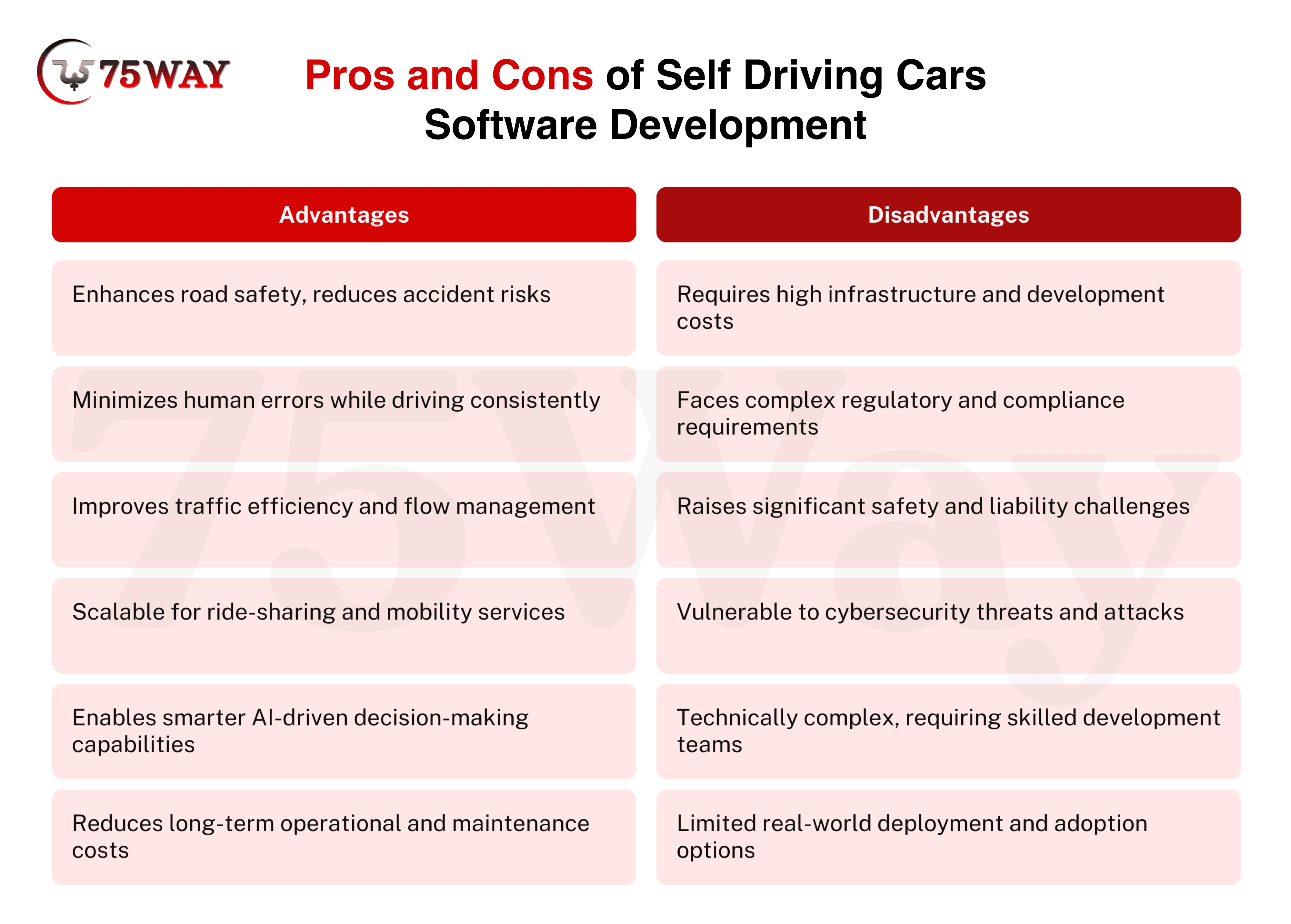

Autonomous car software offers immense benefits but also presents challenges. Founders have to understand the advantages and disadvantages to make informed decisions, balance investment risks, and plan deployment strategies. A clear view of trade-offs provides safety, scalability, and ROI while addressing regulatory and technical complexities.

Advantages of Smart Autonomous Car Software Development

Autonomous vehicle software offers multiple advantages for modern transportation. Intelligent systems reduce risks, improve traffic flow, and enable fleets to operate reliably. These advantages make investments in self-driving technology appealing to founders, startups, and mobility service providers looking for safety, scalability, and cost-effective operations.

Enhanced Road Safety: Advanced perception and decision-making reduce the likelihood of collisions. Vehicles anticipate hazards, respond to unexpected obstacles, and maintain proper lane discipline to improve road reliability and ensure safe travel for passengers and other road users.

Reduced Human Error: Consistent monitoring and precise maneuvers minimize mistakes caused by fatigue, distraction, or misjudgment. Automation ensures steady control over steering, acceleration, and braking for predictable driving performance across all conditions.

Improved Traffic Efficiency: Smart navigation and adaptive planning optimize vehicle movements, reducing stop-and-go congestion. By coordinating with other autonomous and human-driven cars, traffic flows more smoothly. The self-driving car software lowers delays and improves overall commuting experiences in urban and highway environments.

Scalability for Mobility Services: Autonomous car software development enables ride-sharing and fleet management at scale. Centralized control and coordinated routing allow multiple vehicles to operate efficiently, expanding mobility services while maintaining safety, reliability, and predictable service for a growing number of users.

Decision Making: AI-driven self-driving car software interprets sensor inputs and predicts other road users’ behaviors. Vehicles execute intelligent maneuvers, such as safe lane changes, merging, and emergency stops, that allow adaptive and confident operation across diverse, dynamic traffic scenarios.

Operational Cost Reduction: Automation reduces human resource requirements and long-term maintenance needs. Self-driving car software maintains optimal driving patterns and reduces wear on brakes, tires, and engines. Fleet operations benefit from predictable maintenance schedules, lower expenses over time, and longer asset life.

Disadvantages of Self-Driving Car Software

Autonomous vehicle software offers many benefits but also poses challenges. High self-driving car software development costs, complex regulations, and technical demands create hurdles. Understanding these limitations helps startups and founders make informed decisions when adopting self-driving technology.

High Infrastructure Costs: The development and deployment of autonomous car software requires substantial investment in sensors, computing hardware, and high-definition maps. Vehicles demand continuous maintenance and support, making initial setup and fleet scaling financially intensive for businesses entering the market.

Complex Regulatory Requirements: Autonomous vehicle software must comply with multiple safety, environmental, and traffic regulations that vary by region. Ensuring adherence to these rules involves continuous monitoring, certification, and updates, which can slow development and affect deployment timelines.

Safety and Liability Challenges: Unexpected failures or accidents raise legal and ethical concerns. Responsibility for collisions must be clearly defined, and extensive testing is necessary to minimize risks and build public trust in autonomous operations.

Cybersecurity Risks: Self-driving car software is vulnerable to hacking, data breaches, or malicious interference. Software and communication protocols require continuous monitoring, robust encryption, and proactive measures to protect passengers, fleets, and operational data.

Technical Complexity: Designing, integrating, and maintaining AI-driven systems involves multidisciplinary expertise. Combining perception, planning, and control algorithms with real-time sensor data demands highly skilled teams and sophisticated infrastructure, creating barriers for new entrants.

Limited Deployment Options: Autonomous vehicles perform optimally in geofenced or controlled areas. Expanding to new cities or complex road networks requires extensive mapping, testing, and software adaptation, limiting immediate scalability and wide-ranging commercial use.

What Is The Cost For Self Driving Car App Development?

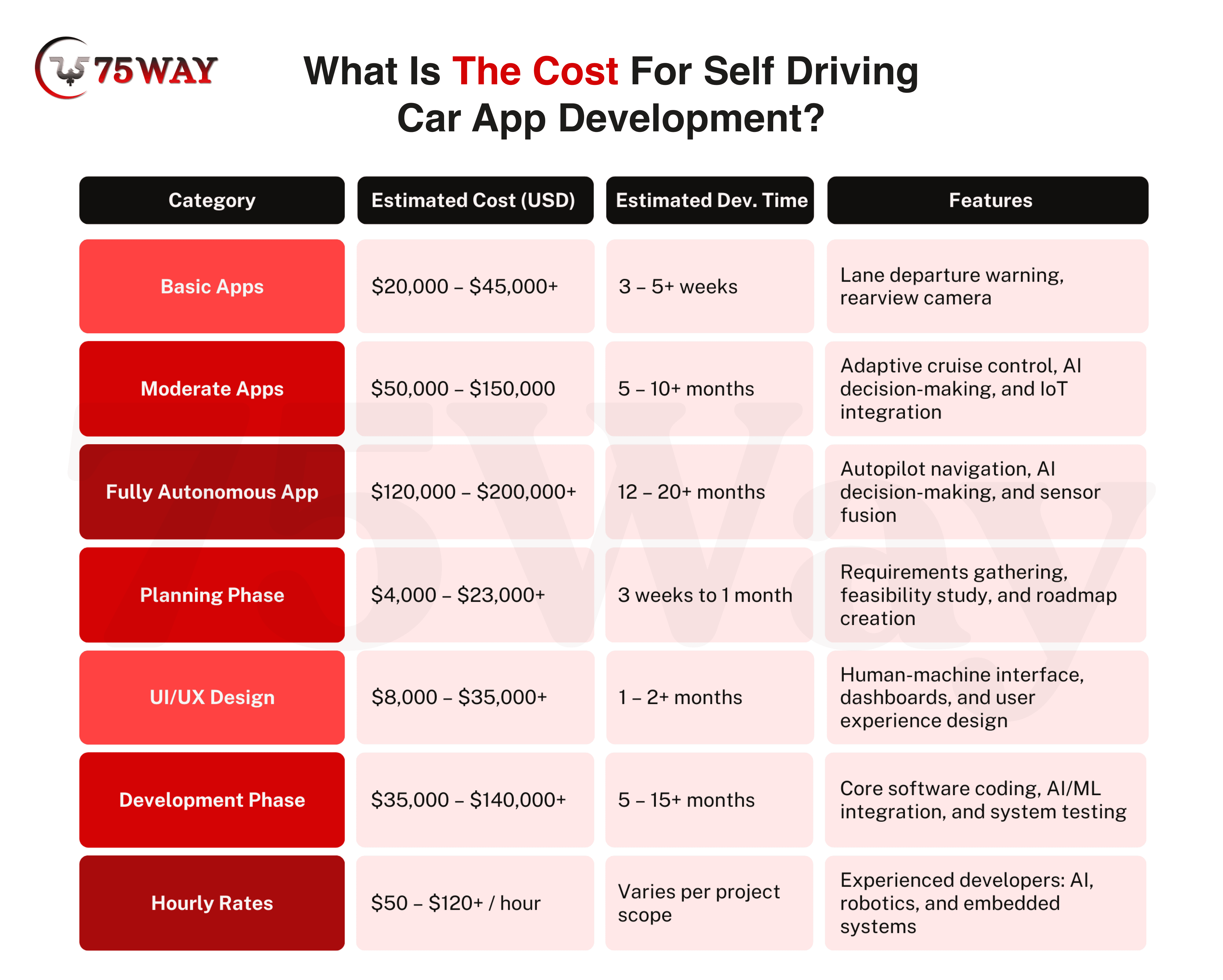

The cost to develop self-driving car software varies depending on complexity, autonomy level, and features. Basic apps focus on alerts and warnings, while fully autonomous apps integrate AI navigation, sensor fusion, and fleet management systems. Costs also vary with planning, design, and hourly developer rates.

Basic Self-Driving Car App: The standard version of autonomous car software is ideal for startups or companies entering the autonomous-vehicle market without a heavy investment. It costs between $20,000 and $45,000+ and typically takes 3–5+ months to develop. This type of software includes essential driver-assistance features, such as lane departure warning and rearview camera integration, to reduce minor accidents and improve road safety.

Moderate App: A moderate autonomous car app is suitable for businesses looking to enhance vehicle capabilities with advanced assistance features. Development costs for mid-level self-driving car software range from $50,000 to $150,000, and timelines typically span 5–10+ months. This software includes adaptive cruise control, AI decision-making, and IoT integration. It allows cars to react intelligently to traffic conditions and communicate with other smart devices.

Fully Autonomous App: Designed for complete autonomy, this tier enables vehicles to operate without human intervention in varied environments. Development costs range from $120,000 to $200,000+, and timelines typically extend to 12–20+ months due to the complexity of AI, sensor fusion, and real-time decision-making systems. Features include autopilot navigation, AI decision-making, and sensor fusion, which together enable vehicles to perceive, plan, and execute driving tasks independently.

Wrap Up

To conclude, self-driving car software development in 2026 requires a comprehensive understanding of AI, sensor integration, planning, and control systems. Startups and founders in the United States must consider autonomy levels, software architecture, and safety compliance to build scalable solutions. While the journey involves costs, technical complexity, and regulatory challenges, the benefits of autonomous car app development make it a strategic investment. By approaching development methodically and using AI-driven technologies, businesses can position themselves at the forefront of autonomous mobility in the USA. Partnering with an experienced software development company can provide smooth execution, expert guidance, and faster time-to-market.

FAQs

What is Autonomous Car Software Development?

Autonomous car software development involves creating systems that allow vehicles to perceive their surroundings, make decisions, and control motion without human input. It combines AI, machine learning, sensor fusion, and real-time control algorithms. Self-driving car software developers design software for perception, mapping, planning, and vehicle actuation, ensuring safety, accuracy, and adaptability in dynamic traffic environments.

The goal of self-driving car software development is to produce autonomous systems that can operate safely in complex urban, highway, or mixed environments, handling everything from obstacle detection to predictive path planning. Self-driving car software development companies often integrate continuous updates and fleet-wide learning to improve performance over time, which lets the same software scale across autonomous mobility services.
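The perceive, decide, and control cycle described in this answer can be pictured with a toy one-dimensional example. This is a minimal sketch under strong assumptions, not a production design: the function names (`perceive`, `plan`, `control`, `drive`), the speed ramp, and all numeric limits are hypothetical, and real perception works on sensor data rather than a known obstacle position.

```python
def perceive(vehicle_pos_m, obstacle_pos_m):
    """Stand-in for perception: distance to the obstacle ahead, in meters."""
    return obstacle_pos_m - vehicle_pos_m


def plan(gap_m, cruise_mps=10.0, safe_gap_m=5.0, slow_zone_m=30.0):
    """Choose a target speed: cruise when clear, ramp to zero at the safe gap."""
    if gap_m >= slow_zone_m:
        return cruise_mps
    if gap_m <= safe_gap_m:
        return 0.0
    return cruise_mps * (gap_m - safe_gap_m) / (slow_zone_m - safe_gap_m)


def control(speed_mps, target_mps, max_accel=2.0, max_brake=6.0, dt=0.1):
    """Move speed toward the target, clamped to acceleration and braking limits."""
    delta = target_mps - speed_mps
    delta = max(-max_brake * dt, min(max_accel * dt, delta))
    return speed_mps + delta


def drive(obstacle_pos_m=100.0, steps=600, dt=0.1):
    """Run the perceive -> plan -> control loop and return final position and speed."""
    pos, speed = 0.0, 0.0
    for _ in range(steps):
        gap = perceive(pos, obstacle_pos_m)
        target = plan(gap)
        speed = control(speed, target, dt=dt)
        pos += speed * dt
    return pos, speed


final_pos, final_speed = drive()
print(f"stopped near {final_pos:.2f} m at {final_speed:.3f} m/s")
```

In this toy setup the vehicle accelerates, cruises, and then slows so that it comes to rest just short of the 5 m safety gap in front of the obstacle.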

What Software Is Used In Self-Driving Cars?

Self-driving cars rely on a combination of software platforms and specialized modules. Middleware frameworks such as ROS (Robot Operating System), and open-source driving stacks built on it such as Autoware, manage sensor data and coordinate control tasks. Perception modules use deep learning models, including CNNs for image processing and PointNet for 3D point clouds.

The planning and decision-making stage uses algorithms such as RRT (Rapidly-exploring Random Trees), PRM (Probabilistic Roadmaps), and reinforcement learning. Vehicle control integrates real-time software with low-level actuation for steering, braking, and acceleration. Additional layers include high-definition map management, GPS/IMU fusion, and traffic-sign recognition. Cloud-based tools enable OTA updates, continuous learning, and fleet monitoring, so vehicles keep improving and remain safe as conditions and regulations change.
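To give a feel for one of the planning algorithms named above, here is a minimal 2D RRT sketch. It is a simplified illustration, not how any particular vendor plans paths: the `rrt` function, the circular obstacles, the 10% goal bias, and every parameter value are assumptions, and it checks only new nodes (not the connecting edges) for collisions.

```python
import math
import random


def rrt(start, goal, obstacles, bounds=(0.0, 10.0), step=0.5,
        goal_tol=0.5, max_iters=5000, seed=0):
    """Grow a tree from start; return a list of points reaching goal, or None.

    obstacles is a list of (x, y, radius) circles.
    """
    rng = random.Random(seed)
    nodes = [start]
    parent = {0: None}

    def collides(p):
        return any(math.dist(p, (ox, oy)) <= r for ox, oy, r in obstacles)

    for _ in range(max_iters):
        # Sample a random point, biased toward the goal 10% of the time.
        if rng.random() < 0.1:
            sample = goal
        else:
            sample = (rng.uniform(*bounds), rng.uniform(*bounds))
        # Find the existing tree node nearest to the sample.
        i_near = min(range(len(nodes)),
                     key=lambda i: math.dist(nodes[i], sample))
        near = nodes[i_near]
        d = math.dist(near, sample)
        if d == 0.0:
            continue
        # Extend from the nearest node toward the sample by at most `step`.
        scale = min(step, d) / d
        new = (near[0] + (sample[0] - near[0]) * scale,
               near[1] + (sample[1] - near[1]) * scale)
        if collides(new):  # simplification: only the new node is checked
            continue
        nodes.append(new)
        parent[len(nodes) - 1] = i_near
        if math.dist(new, goal) <= goal_tol:
            # Walk parent links back to the start to recover the path.
            path, i = [], len(nodes) - 1
            while i is not None:
                path.append(nodes[i])
                i = parent[i]
            return path[::-1]
    return None


path = rrt(start=(1.0, 1.0), goal=(9.0, 9.0), obstacles=[(5.0, 5.0, 1.5)])
print("waypoints:", len(path) if path else "no path found")
```

RRT finds a feasible path rather than an optimal one; production planners typically add edge collision checks, path smoothing, and vehicle dynamics constraints on top of this idea.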

Who Makes Software For Self Driving Cars?

Companies that create autonomous car software range from established automotive suppliers to innovative tech startups. For instance, 75way is a trusted autonomous car software development company in the USA that builds self-driving car software and apps. Waymo focuses on AI-driven perception and fleet management, while Aurora develops decision-making and control systems for logistics and ride-hailing. Automakers, including Tesla, General Motors, and Ford, build proprietary software stacks for autopilot and navigation systems. The ecosystem also includes third-party AI developers, mobile app developers, cybersecurity experts, and sensor integration teams, all collaborating to make vehicles capable of safe, intelligent autonomous driving.

What Are The Ethics of On-Board Software For Self Driving Cars?

Ethical on-board software is what lets autonomous vehicles operate safely and transparently. Prioritizing human life, protecting data, and maintaining accountability are central. Continuous monitoring, bias mitigation, and regulatory compliance keep the software responsible and aligned with evolving real-world conditions.

Human Safety Priority: Autonomous vehicles must make decisions that minimize harm in emergencies, balancing risk for passengers, pedestrians, and other road users, ensuring life-critical scenarios are handled responsibly at all times.

Transparency in AI: Algorithms should be auditable and understandable, allowing engineers, regulators, and stakeholders to trace decision-making processes and verify safety standards across all operational conditions.

Data Privacy Protection: Sensitive passenger and environmental data must be stored securely, encrypted, and processed responsibly to prevent unauthorized access and comply with privacy regulations.

Bias Mitigation: AI models need evaluation to avoid discrimination against certain road users, environments, or scenarios, ensuring fair and equitable decisions across diverse traffic and weather conditions.

Liability and Compliance: Companies must take responsibility for software-related accidents, adhere to safety standards such as ISO 26262 (functional safety) and SOTIF (ISO 21448), and document testing, validation, and operational decisions.

What Programming Languages Are Used To Build Autonomous Car Software?

Autonomous vehicle software development uses multiple programming languages, each suited to specific tasks.

- C++ provides real-time performance for control systems and perception modules.

- Python is widely used for AI model development, simulation, and data analysis.

- MATLAB/Simulink helps design and test algorithms for trajectory planning, control, and signal processing.

- CUDA is used for GPU acceleration in neural networks and computer vision.

- Shell scripting languages such as Bash automate deployment and integration tasks.

- Some projects use Java, JavaScript, or ROS-specific scripting for middleware and communication between modules.

This combination ensures high-speed processing, AI efficiency, and reliable integration across perception, planning, and vehicle actuation layers.